Coaxing USAMO Proofs From o3-mini-high

Decent USAMO performance may be closer than headline results suggest

The 2025 USA Mathematical Olympiad (USAMO) took place last week. This is the pinnacle of US high school math competitions. It spans two days, with contestants given given 4.5 hours to solve 3 problems on each day. Solutions to problems take the form of rigorous proofs, which are graded by human experts. Each solution is scored on a 0-7 scale, with partial credit given sparingly.

To qualify for the USAMO, a US high school student must do well on two prior competitions. First comes the AMC 12, which any student may take. Students with top scores on that qualify for the AIME. The top ≈265 students, as measured by an index combining the scores from these two competitions, qualify for the USAMO. This year, over 37,000 students began the process by taking the AMC 12. Thus, the USAMO qualifiers represent the top ≈0.7% of that initial pool of contestants.

I mention that just to set up this interesting fact: AI systems reliably qualify for the USAMO, doing very well on the AMC 12 and AIME; but they bomb the USAMO itself.

An analysis from the folks at MathArena showed that the best math AI systems score what I would call “approximately 0” on the 2025 USAMO.

Incidentally, this is terrific work from the MathArena team. Evaluating open-ended output like proofs is hard, but it’s critical for understanding AI capabilities. I’m glad to see folks just biting the bullet and doing it. Read the paper for the full methodology and lots of good observations.

Is there anything to make of this abrupt drop-off from the AIME to the USAMO? The simple read is that it just reflects current capabilities. After all, it’s not unusual for humans to get a 0 on the USAMO. In 2023 and 2024, 8% and 5% of contestants, respectively, scored 0 total. Is that just where AI systems are too?

I’m not so sure. As much as I caution against over-extrapolating from AI performance on the AIME, my sense is that the degree of mathematical capability exhibited by the best AI systems should translate to better scores on the USAMO. Also, the human stats might not really “excuse” the AI performance. Some of those human 0s reflect people having a bad day: getting sick, choking under pressure, etc. Some of them reflect humans who just squeaked by the qualification threshold but are really out of their depth. The former shouldn’t affect AI systems at all, and the latter doesn’t fit with their reliably good AIME performance.

So I think there might be something more to explain here. What else could be at play? Apart from difficulty, the most salient difference between the AIME and the USAMO is the solution format: short-form answers vs. proofs.

As I understand it, short-form answers are at the core of how “reasoning” models are trained. My cartoon-level model of this training process is you start with a pre-trained LLM and then do reinforcement learning (RL) using a binary reward signal. “Binary reward signal” means you can easily tell if the model is getting the right or wrong answer on some task. This is readily doable for math problems with short-form answers, and pretty challenging to do for problems with proof-based solutions.1 So maybe we should generally expect underperformance on proof-based problems.

But that’s not to say there’s nothing there. I’ve previously written about how the top-of-the-line models, like o3-mini-high, don’t seem so good at proofs, at least in my own nonsystematic exploration. But, from that same exploration, I would still say they’re much better at proofs than the prior generation of models, like GPT-4o. So we probably shouldn’t conclude that these models are massively over-fit to problems with short-form answers. There are some positive spillover effects, despite, as far as I know, training on proofs not being the main mechanism of improvement.

In trying to decide what to think, I found it helpful to recall what I thought of LLMs’ reasoning abilities before the first reasoning model came out. For GPT-4o, I had the sense that it could often handle individual steps of math problems correctly in isolation, but that inaccuracies would creep in too quickly for it to solve more complex problems. It had a lot of the right “raw material”, it was just undisciplined, so to speak, when it tried to put things together. Then, when o1 came out, it seemed easy to explain what had happened in these terms: OpenAI had gotten it to “focus better” at putting the right elements together to get the right answer.2

TL;DR: The thesis of this post is that a model like o3-mini-high has a lot of the right raw material for writing proofs, but it hasn’t yet been taught to focus on putting everything together. This doesn’t silence the drum I’ve been beating about these models lacking creativity, but I don’t think the low performance on the USAMO is entirely a reflection of this phenomenon. I would predict that “the next iteration” of reasoning models, roughly meaning some combination of scale-up and training directly on proofs, would get a decent score on the USAMO. I’d predict something in the 14-28 point range, i.e. having a shot at all but the hardest problems.

Will that mean anything? I wouldn’t necessarily count on it, i.e., it will more reflect “catching up” on abilities the model almost already has. In part I’m registering this prediction just so we don’t have to be shocked if this happens. That said, it will be interesting to see what spillover effects getting better at proofs has on other tasks.

If this idea is correct, it should be possible to “coax” o3-mini-high to valid USAMO solutions without giving away too much. The rest of this post describes my attempts to do just that, using the three problems from Day 1 of the 2025 USAMO.3 On the easiest problem, P1, I get it to a valid proof just by drawing its attention to weaknesses in its argument. On the next-hardest problem, P2, I get it to a valid proof by giving it two ideas that, while substantial, don’t seem like big creative leaps. On the hardest problem, P3, I had to give it all the big ideas for it to make any progress on its own.

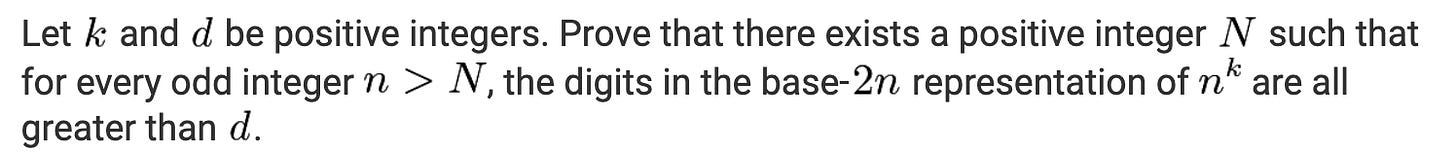

P1

This problem statement takes a moment to unpack. It’s saying, take any k and write nᵏ in base 2n. Then, as n (odd) grows, prove that: eventually all the digits in the base-2n representation are at least 1: no 0s show up again; also, eventually all the digits are at least 2: no 1s show up again; and so on.

What follows is a detailed account of my attempts to get a proof out of o3-mini-high. Feel free to skim: you can certainly get a sense of what happened without reading any of its output, just my comments. If you lose steam, skip to the Discussion section.

When given this problem with no additional prompt, o3-mini-high took a straightforward approach: it wrote out the base-2n representation of nᵏ and tried to bound each digit below by something that grows monotonically with n. It correctly showed that there would be k digits in base-2n, and that the left-most digit would grow on the order of n.

I spotted two issues with the proof.

First, it didn’t make use of n being odd. That’s odd (sorry) because we wouldn’t expect the problem to have extraneous premises. Maybe, long shot, o3-mini-high had correctly proven a more general statement? I asked if the proof relied on n being odd, and it said yes, but it didn’t give a good justification. Beyond being logically wrong, this also just misses one of the genre conventions: a proof should use each premise or else mention if a premise is unnecessary. Counterintuitively, I took this as a positive sign: the details of what makes a valid proof hadn’t been drilled into it, so in a sense its errors might just be for lack of trying.

More substantively, though, its lower bound for the other digits was just plain wrong. Here aᵢ is the iᵗʰ base-2n digit of nᵏ.

The claim about the growth rate of the final sum is incorrect. The power of n coming from the j=k-1 term is clearly at least k-1-i, but the coefficient aₖ₋₁ of that term can also contribute an additional power. In fact, the model’s proof had previously shown that aₖ₋₁ must grow more-or-less linearly with n. So the sum grows with order k-i, and that sinks the entire proof.

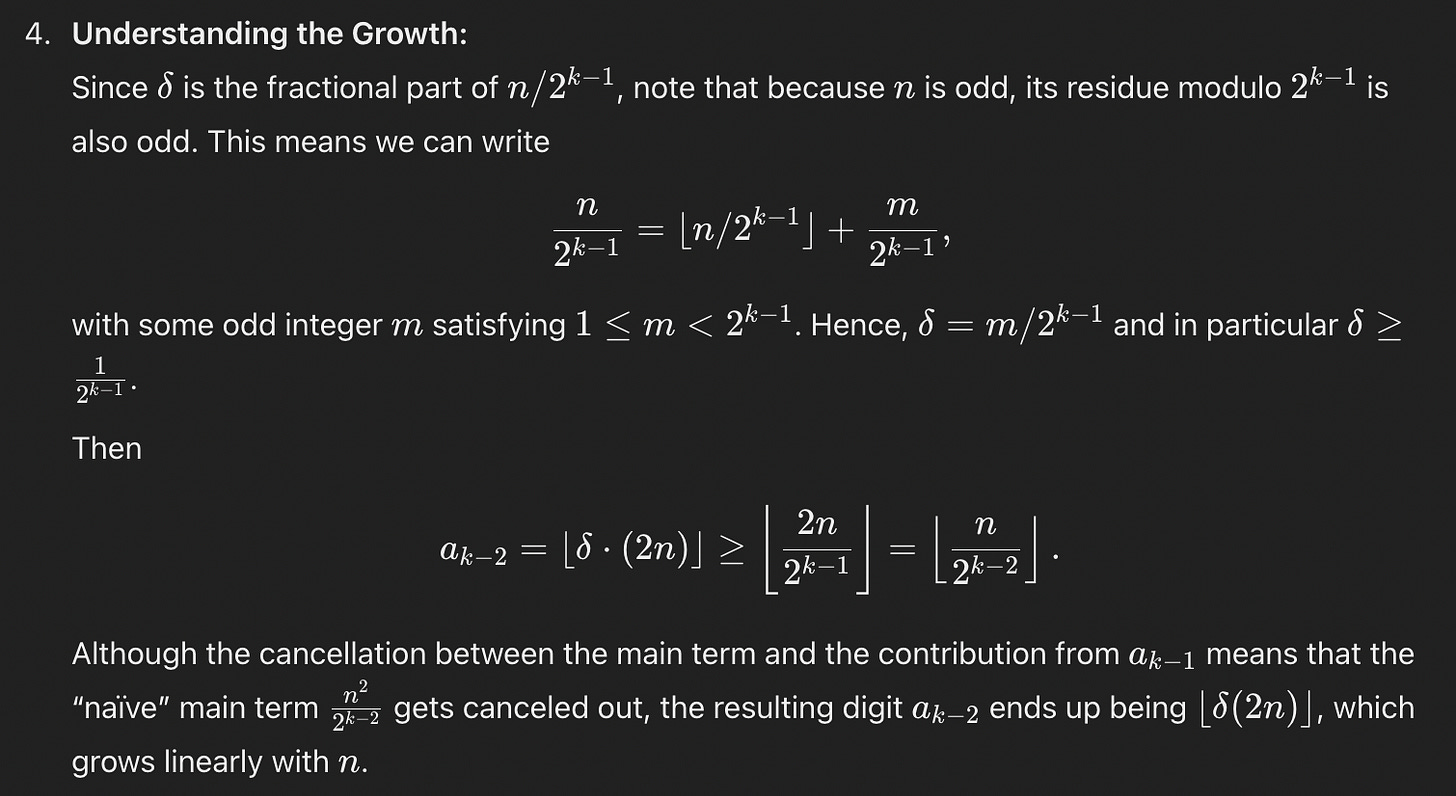

I tried to draw its attention to this. I asked it to look at the case of i = k-2, where I hoped the issue would be most obvious. Not only did it implicitly acknowledge that it was wrong, but it correctly used the premise that n is odd, and, most importantly, came up with a valid argument for this case. Not too bad!

This isn’t a complete proof yet, but it’s on a good track. The logic is right, and seems like it could generalize. I tried asking it to generalize this argument beyond k-2 right away, but it just fell back on the incorrect asymptotic claim. So, instead, I asked it to work out the k-3 and k-4 cases, to try to build up its sense of the general pattern. We know it likes doing that sort of thing when solving problems with short-form answers, but it doesn’t seem inclined to try the same strategies for proofs — again, perhaps because it hasn’t been trained on proofs.

It got both of these right. Here’s the k-4 case.

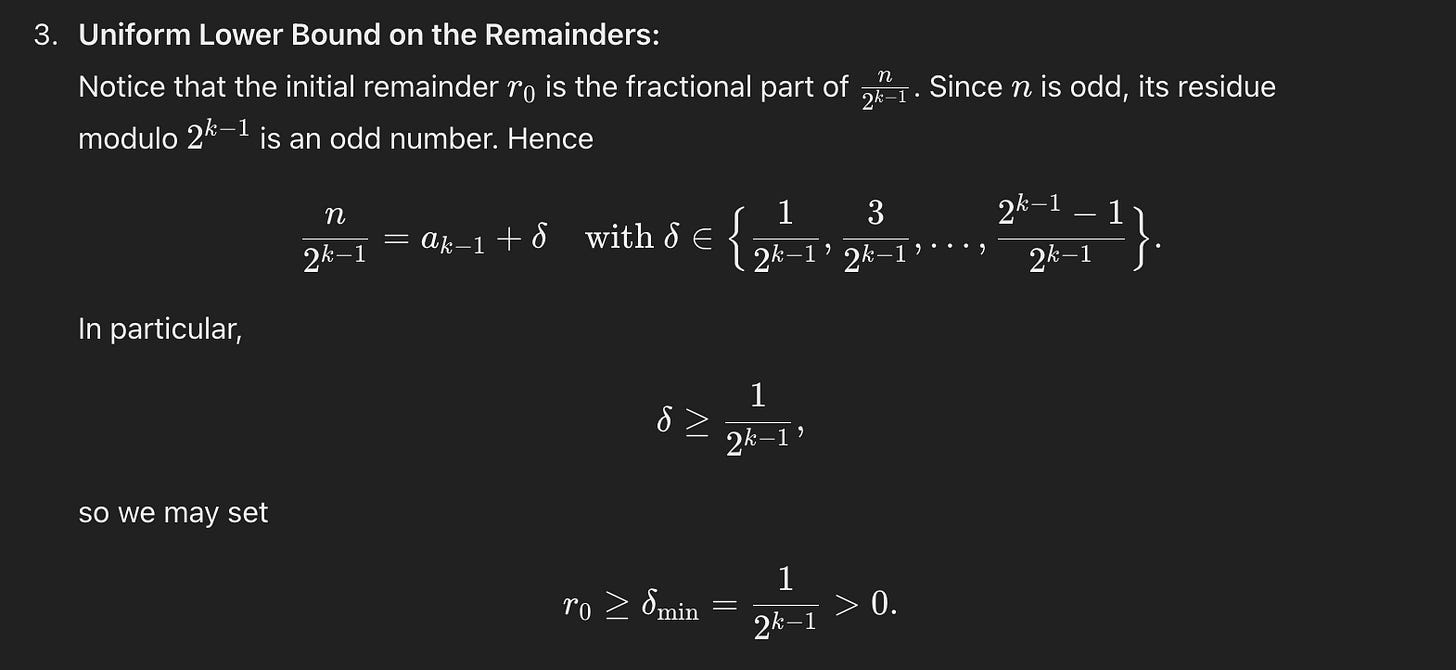

At this point I’m basically convinced the approach generalizes to all digits. Really that’s all a proof is, so you could be a bit cheeky here and say “and so on, QED” and that might be good enough. Better still to sum this up in general terms. So I asked it to generalize. Here’s what it said.

(Confusing to use {} both for a sequence and for the fractional part, but whatever.)

This does look like the right generalization, but I thought it needed to better justify why aₖ₋ₗ = ⌊2nrₗ₋₁⌋, so I asked it to expand on that. And actually, I think maybe that wasn’t necessary: it really is just the division algorithm:

So, I think we’re basically done.

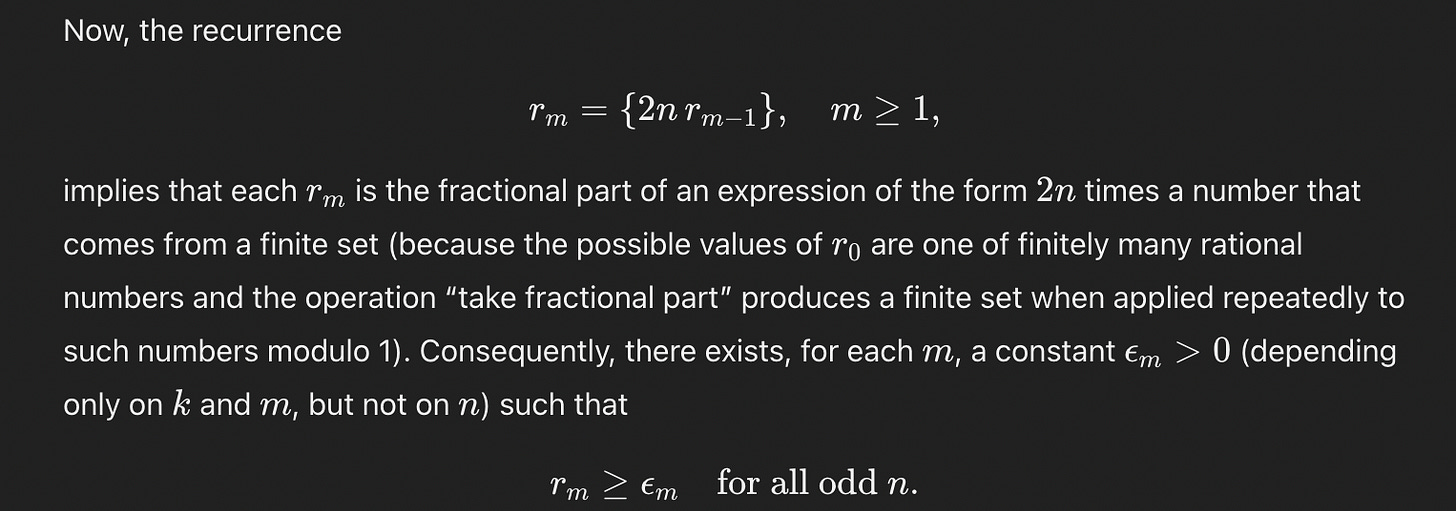

Final Proof

I asked it to sum up all of its work in a single, self-contained proof.

Modulo “maybe the model snuck some bullshit past me” — which is totally, sadly possible — I think this is a perfectly valid proof, and would get 7/7 on the USAMO. If you’re curious, or motivated to try to find an error, here‘s the full chat.

Discussion

Of course, it only got this proof with my prompting. Even so, I would say it still did most of the heavy lifting. My questions were all “playing dumb”: I didn’t supply any new ideas, I just drew its attention to the steps in its argument that I believed were wrong or weak. In fact, I think I played a role similar to what we see it do on its own in its chain of thought: repeatedly asking, “Wait, is that right?” So while it clearly needs assistance to do this today, I don’t think the capability is far beyond it. Heck, I’d even be curious what happens if you just let it think for longer — a parameter we can’t control, beyond choosing “high” reasoning mode.

The proof also isn’t so insightful about “what’s really going on”. I’ve discussed how these models often take the “low road” to solve problems, and this feels like a low road proof. Given how much of a slog it was to get there, I was actually pleasantly surprised that the summary was as concise as it was. It’s moderately similar to this (ostensibly) human-authored solution. But I think better solutions give more insight. For instance, here’s Evan Chen’s solution.

This is much more concise, and shows that what we’re “really” doing is taking the remainder upon division by powers of 2n, which amounts to inverting powers of n mod powers of 2. You can see that’s more-or-less what’s happening in o3-mini-high’s solution as well, just with less up-front clarity. Also, this proof establishes a specific value of N that works, which o3-mini-high doesn’t do, though it seems like it should be straightforward and natural to do. Another violation of the genre rules, maybe.

All in all, assuming it didn’t pull one over on me, I’d rate this problem as “just out of reach” for o3-mini-high.

P2

Note: It’s smoother sailing from here, just because I couldn’t get o3-mini-high to make as much progress, so there will be less intermediate output to wade through.

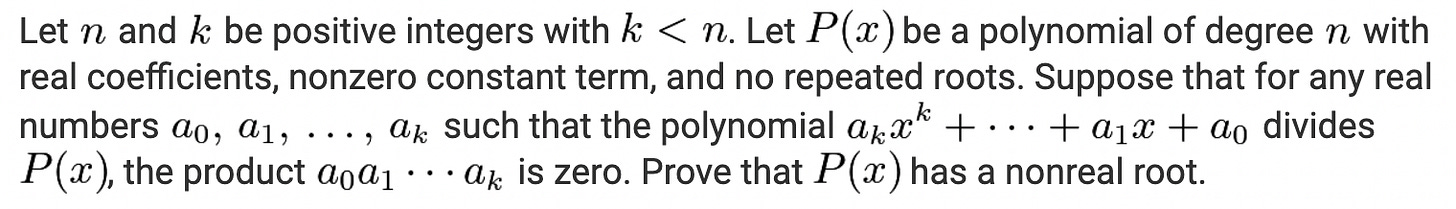

This too takes a moment to unpack. It’s saying, suppose for the given P that there is an integer k such that all degree-k divisors of P have at least one coefficient that is 0. Prove that P can’t have all real roots.

Looking through human solutions, the most common solution (including Chen’s) proceeds by contradiction in three steps.

Note that, WLOG, you can assume k=n-1.

Prove that some divisor Q of P has two consecutive zero coefficients.

Show that this means Q cannot have all real roots — contradiction.

o3-mini-high doesn’t seem able to get (1) on its own. After it was clear it wouldn’t come up with (1) as part of a full solution, I tried asking it just for all the potentially-helpful WLOG statements it could think of, over and over. Still no luck.

Without (1), it doesn’t make much progress on (2). But, if I rephrase the problem with k=n-1, it does make progress: it only takes one “wait, is that right” question to get it to an argument that implies (2). It gets stuck again here, consistently bluffing that it’s actually done. The best I could do was suggest that it prove (3) — which it did. With (3) in hand, it wrapped up the problem correctly.

Here’s its final proof, with k=n-1 in the problem statement and “the lemma” referring to (3), i.e. the statement that a polynomial with real coefficients and all real, nonzero roots cannot have two consecutive coefficients equal to zero.

The full chat is here.

In other words, it needs a big hint for (1) and (3). I don’t want to understate the challenge of coming up with (1), or, having proven (2), thinking to try for (3). Looking through the human solutions, there are people who got the rest of the solution but missed precisely these parts.

A human missing (1):

A human missing (3):

But I do think these steps aren’t too unmotivated, as the human comments indicate. For (1), you’re looking for a pigeonhole-style argument one way or another, and this is a helpful way to get one. For (3), there’s at least one natural path to follow — in fact, the same one o3-mini-high follows — where you end up reducing the problem to the case where a divisor of P has two adjacent non-zero coefficients. It’s natural, then, to see if you can do anything with that. And, for what it’s worth, (3) on its own has been written up before.

Will the next round of improvements allow it to come up with these ideas on its own? I’m not sure, but I lean toward yes. I think reasoning steps like these are pretty common. So, even though o3-mini-high seems quite far from getting this proof right now, I think that distance may not be so hard to cover.

P3

It seems quaint now that I said P1 and P2 took “a moment” to unpack. P3 has one of the most intimidating problem statements I’ve ever seen. I think the best way to internalize it is to just read the solution.

So: the answer is that Alice can win by choosing S to be the points outside the circle with diameter PQ. The idea is that this turns the similar triangle condition into the easier-to-work-with condition that cities A and B are connected precisely when no other city lies within the circle with diameter AB. Another way to put this condition is that A and B are connected if and only if, for every other city C, ∠ACB < 90°. From there, the proof that the resulting road network is (i) connected and (ii) planar isn’t so bad. Here’s one I found easy to follow.

How do you get to this construction? One person had this to say:

That’s one ray of hope: the construction of S as the points outside the circle with diameter PQ isn’t that complicated, and once you start playing with it, you realize it fits into the problem nicely. The other bit of hope, for an AI system anyway, is that there’s some prior art here: the road network is an example of an object called a Gabriel graph, which has been studied extensively.

I couldn’t coax o3-mini-high to do much here. It starts out convinced that Bob wins. It didn’t start making progress until I gave it the right answer, the right construction, and the observation that this means A and B are connected if and only if, for every other city C, ∠ACB < 90°. Even then, it only proved planarity, and I couldn’t get it to prove connectivity. It seemed inclined to make an argument that only works if Bob places finitely many cities. Here’s one example chat. I admit I wasn’t trying too hard at this point, so, as they say, exercise for the reader! Maybe you can do better.

This problem requires more creativity than the prior two. Even so, I’m not even sure I’d say it requires a lot of creativity. Hitting on the construction seems like the main challenge, but the construction itself has a concise description. There are some plausible paths there too, especially if your background knowledge includes Gabriel graphs. But o3-mini-high doesn’t seem close yet, and I’d only give the next iteration an outside chance of getting this problem.

Conclusion

We can think of solutions to problems as sitting in a high-dimensional search space. We can think of algorithms (like o3-mini-high) as exploring some subset of that search space. For any given problem, the algorithm may be closer to or farther from finding the right solution.

For proof-based solutions, I also think it’s helpful to think of individual reasoning steps as sitting in the search space, and an algorithm may be closer or farther from finding these individual steps. Intuitively, if it’s close to finding the individual steps, it’s probably not too far from finding the full solution.

Even though o3-mini-high can’t find full solutions to any USAMO problems, my explorations here lead me to think that it’s pretty close to finding a lot of the individual steps needed to solve easy-to-medium USAMO problems. I think it’s reasonably likely that the next iteration of these models will solve these problems.

There is no easy way to automatically judge whether a natural-language proof is correct. Correct proofs can vary substantially from each other, since there are often many different ways to prove the same statement. Incorrect proofs can be very superficially similar to correct ones, just misstating or omitting a single key detail.

As mentioned, the technique to use was apparently RL. The mechanism by which the model improved was, apparently, extending its answers, i.e. using a longer chain of thought.

USAMO problems increase in difficulty within a given day: P1 < P2 < P3 and P4 < P5 < P6. Correspondingly-ordered problems are generally comparable: P1≈P4, P2≈P5, and P3≈P6. This is only a rough guideline, with moderate year-to-year variation. I focused on Day 1 just because this for brevity. A brief investigation of the Day 2 problems led me to believe that similar conclusions would hold overall.

Foolishness Index⭐⭐⭐ o1 pro To trip on flat ground

Given a thin, long water pipe as a water source, you have three unmarked water cups with capacities of 5 liters, 6 liters, and 7 liters. You can aim the water pipe at the opening of a cup and press a switch to fill it. Special Note: If you pour out the water from a cup (emptying it completely, as if pouring it on the ground, because you cannot return water to the source, which is a thin pipe), it will be considered waste. How can you obtain exactly 8 liters of water using these 3 cups while minimizing water waste?

https://chatgpt.com/share/67570ef1-166c-8010-9970-62f37aadf497

***************************************************************************************

You have a water reservoir with abundant water and three unmarked water jugs with known capacities of 5 liters, 6 liters, and 7 liters. The machine will only fill a completely empty jug when you place it inside. Special Note: You can empty a jug by pouring its contents into another jug, but if you pour water out without transferring it to another jug, as if pouring it on the ground,it will be considered "waste". How can you obtain exactly 8 liters of water using these 3 jugs while minimizing water waste?

https://chatgpt.com/share/67570e96-1d9c-8010-bfc3-afaf609d010c

Foolishness Index⭐⭐ Can llm reason?

You have 11 balls. One of them is counterfeit and is either lighter or heavier than the others, which are all genuine and have the same weight. You have a balance scale, which can only compare the weights of the two sides. Your goal is to identify the counterfeit ball and determine whether it is lighter or heavier, using the fewest possible weighings.

However, there are additional constraints:

* **Initial `p` value:** `p` starts at 0.

* **`p` increment:** Each ball that has been placed on the scale *at least once before* will increment the counter `p` by 1 *each time* it is placed on the scale again.

* Example: If ball #1 and ball #2 have each been weighed once previously, placing *both* of them on the scale again will increase `p` by 2.

* **`p` Limit:** The value of `p` can increase to a maximum of 1.

* **Minimize weighings:** The number of weighings must be minimized