To Think Deeply About Simple Things

Gemini 2.5 Pro Deep Think solved a medium-difficulty USAMO problem

Programming note: I recently joined Epoch AI as a researcher. The pace of publishing here at Lemmata will likely slow, though I hope to keep it bounded above zero. Of course, I’ll also be writing about general AI topics for Epoch! For starters, I recently wrote about a popular science benchmark. Follow along there if you’re interested.

Fate keeps bringing me back to Problem 2 from the 2025 USAMO, hereafter “P2”.

In March, I tried to coax USAMO proofs out of o3-mini-high. My conclusion for P2 was that o3-mini-high had some of the building blocks but needed some substantial hints. Also see that article for general background on the USAMO.

I returned in May, having grown perplexed as to why the next iteration of models (Gemini 2.5 Pro, o3/o4-mini-high) couldn’t ever seem to get the very first step of the proof most commonly found by humans, hereafter “the human proof”. My best guess was that this step, being relatively abstract, isn’t easy to learn when training on problems with short-form answers.

Now Google has announced that Gemini 2.5 Pro’s forthcoming “Deep Think” mode has solved this problem. This is a milestone! It’s the first medium-difficulty USAMO problem solved by an LLM. But it’s still perplexing: Deep Think’s proof doesn’t include the key step I spent a whole post discussing!

Who would have guessed that this problem—unremarkable by human standards—would be such a fruitful object of analysis regarding AI.

I’ll do three things in this post: review the problem and the human proof’s first step; review Deep Think’s proof; and, speculate about what it all means.

TL;DR: Maybe we can say that Deep Think is struggling with a general skill of encapsulation. The first step of the human proof is not only a little more abstract, but also a little more self-contained. Deep Think’s proof is less encapsulated: the “chunks” are bigger. The proofs are logically equivalent of course, but perhaps the chunkier approach scales less well. Maybe this helps explain why LLMs seem to struggle to create whole proofs, even as they show increasing ability to solve individual steps when directed by mathematicians.

P2 Refresher

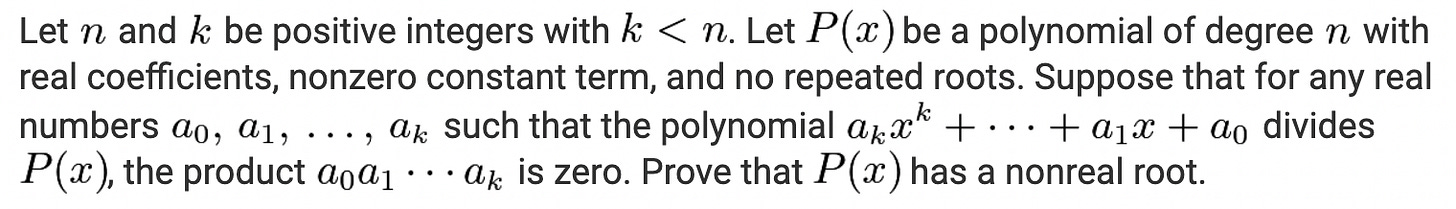

For the third time now, here’s the problem:

In other words, suppose that all degree-k divisors of P have at least one coefficient that is 0. Prove that P can’t have all real roots.

The human solution proceeds by contradiction, i.e. assume P has all real roots. From there, the proof follows this outline:

Note that, WLOG, you can assume n=k+1.

Show that two degree-k factors of P must have the same zero coefficient.

Use that to find a divisor of P that has two consecutive zero coefficients.

Show that this new divisor cannot have all real roots — contradiction.

For a full proof that follows this outline, see here. (1) is the step I wrote about before. Here’s how one human solution presents it.

Assume for the sake of contradiction that P has n real roots. If n>k+1, we just need to consider a subset of these roots in the n=k+1 case to get our contradiction. Thus assume n=k+1.

I elaborated on this a bit:

For clarity, I’ll spell this out a bit more. Suppose we have proven the n=k+1 case: if all degree-(n-1) divisors of P have at least one coefficient that is 0, then P has a nonreal root. Now consider a smaller k. Assume for the sake of contradiction that P has all real roots. Form a degree-(k+1) polynomial with k+1 of these roots; call it Q. Note that Q satisfies the same conditions as P: real coefficients, nonzero constant term, and no repeated roots. Also, any degree-k divisor of Q is a degree-k divisor of P. So, by the problem’s supposition, such a divisor must have a zero coefficient. But, by the supposition that we’ve proven the theorem for the n=k+1 case, this all implies that Q has a nonreal root. Since Q’s roots are a subset of P’s roots, this implies that P has a nonreal root: contradiction. Thus, we only need to prove the n=k+1 case.

I hope that verbosity doesn’t obscure the simplicity of the idea: it’s not doing anything clever, it’s just noting that the conditions of the problem are all “preserved”, so to speak, by looking at divisors of P of any degree > k, and thus we can fix n to have any such value. It may not be obvious why this observation would be useful, but it’s nonetheless the sort of thing I think you’d find if you played around with the problem for a while. Also, it just feels very standard: it’s a kind of observation you’d see made all the time in an algebra or analysis class.

The utility of (1) is that it lets you get (2) from a straightforward application of the Pigeonhole Principle. The meat of the problem is going from (2) through (3) and (4).

Deep Think’s Version

The full proof is available here. To give an accurate, if cheeky, outline:

A more complicated, combined version of (1) and (2) from the human proof

See above

Same as the human proof

Same as the human proof

I’ll now go over what it does for (1)/(2), rewritten a bit to avoid notational complexity that we don’t need when looking at just this part.

Consider a set of k+1 roots of P, call them s₁, …, sₖ₊₁. Consider the k+1 subsets of size k from among these roots: this gives us k+1 divisors of P, each of degree k. So, the hypothesis of the problem applies: each of these divisors must have a zero coefficient. This zero can’t be the coefficient of xᵏ, and it can’t be the constant coefficient either, because 0 is not a root of P. So, these zero coefficients must be among the k-1 terms xᵏ⁻¹, …, x.

The easiest next step would be just to say that, since k+1 zero coefficients are distributed among k-1 terms, there must be two such divisors with the same zero term.1 That is precisely (2) from the human step, and it’s all that the rest of Deep Think’s proof actually depends on.

Deep Think does something a little more complicated. Let Q₁, …, Qₖ₊₁ be the divisors of P under consideration here. Let Aₘ be the set of integers i such that Qᵢ has a zero coefficient at xᵐ, where m is in 1, …, k-1. Since each Qᵢ has some zero coefficient, the union over m of Aₘ is all of 1, …, k+1. But there are only k-1 sets Aₘ, so if we can show that each Aₘ has at most one element, then we have a contradiction.

In other words, we’re done if we can show that having two of these divisors with the same zero term leads to a contradiction. At the end, we’re at the same place as in the human proof, after (2), but we took a somewhat different route.

Think Smarter, Not Deeper

What do we make of these differences? Loosely speaking, the human proof looks a bit like this to me, where 1, 2, 3, 4 are the steps above, and P is the desired result:

In contrast, Deep Think’s proof looks a bit more like this:

It’s easier to get started with the first form: you just need (1), and from (1) I think it’s a small leap to (2). But, if you can’t find (1) on its own, and instead have to roll (1) and (2) together, it seems plausible that it would be harder to get started.

This encapsulation of (1) into its own step is a critical skill in doing mathematics: it’s much easier to chip away at smaller problems than to have to crack bigger problems all at once. More broadly, planning the overall approach is a key part of any larger research project. It doesn’t seem like the models today are doing much planning. Instead, they just have to hope they can find the right chunks within the space of things they can possibly find.2 And, apparently, (1) is still not something they can find.

So, I wonder if what we see is Deep Think working harder, but not smarter. It’s capable of finding bigger chunks, but that’s still a pretty brute-force approach.3

Maybe the path to scaling runs through finding more efficient ways of reasoning, including things like encapsulation, hierarchy, and abstraction. I don’t have any reason to think this is out of bounds for AI systems, though it’s plausible that it might be a harder skill to learn. It’s also at least compatible with my earlier hypothesis that the skills required to find step (1) on its own might not readily emerge from solving problems with short-form answers.

In any case, it will be interesting to see if this ability begins to emerge.

You can of course get something a little stronger: either two pairs of such matching-zero-coefficient divisors, or a triple of divisors with the same zero coefficient. But the proof only needs a single pair.

This perspective is probably influenced by my having recently watched this talk by mathematician Timothy Gowers, about his opinions on why LLMs aren’t better at proofs. One idea he discusses is that LLMs approach problems with “trial and error”. They try a strategy, see if it works, and, if not, give up and try something else. Human mathematicians, Gowers proposes, will do more to tweak their initial failures and thus climb toward a full solution. This sounds like the test-time training from AlphaProof, but I agree it doesn’t match what LLMs seem to be doing.

We don’t know how Deep Think works. If it’s as simple as sampling many times and then picking what it thinks is the best one, then we might expect a relative lack of emergent abilities, just “more of the same”. I think this is compatible with what we see in this one example, anyway.