The Inferences We Make Along the Way

AI systems seem to lack some basics when it comes to solving proof-based problems

Note: this post discusses proofs only in the sense of informal, natural language proofs. For a discussion of AI systems that generate formal proofs, see here.

This post explores two related topics. First, I’ll look at a challenging math problem that suggests one limit in current AI systems’ math capabilities: they’re surprisingly bad at some relatively standard reasoning steps involved in solving proof-based problems. Second, given that, I’ll ask how we might improve this deficiency. I’ll review a bit of prior work here, and discuss some possible future directions. I certainly don’t know how to solve this problem, but it’s fun to think about.

Boundary Conditions

What is the current frontier of LLM-based AI systems’ math capabilities? There are various abstract answers to this. For instance, I’ve proposed that they lack creative problem-solving abilities. But, for the sake of getting a concrete answer, we can formulate a more concrete question: what are the easiest-for-humans problems that these systems can’t solve?

I’ll discuss such a problem in this section. It comes from an elite US high school math competition: the USAMO. For background on the USAMO, see here. I’ll just mention that the USAMO consists of six problems: two easy, two medium, and two hard.1 Recently-released systems (Gemini 2.5 Pro, o3/o4-mini-high) solved the two easier problems on the 2025 competition. However, no system has solved the medium or hard problems, or even gotten much partial credit toward doing so. So, we’ll look at one of the medium-difficulty ones. Here it is.

In other words, suppose that all degree-k divisors of P have at least one coefficient that is 0. Prove that P can’t have all real roots.

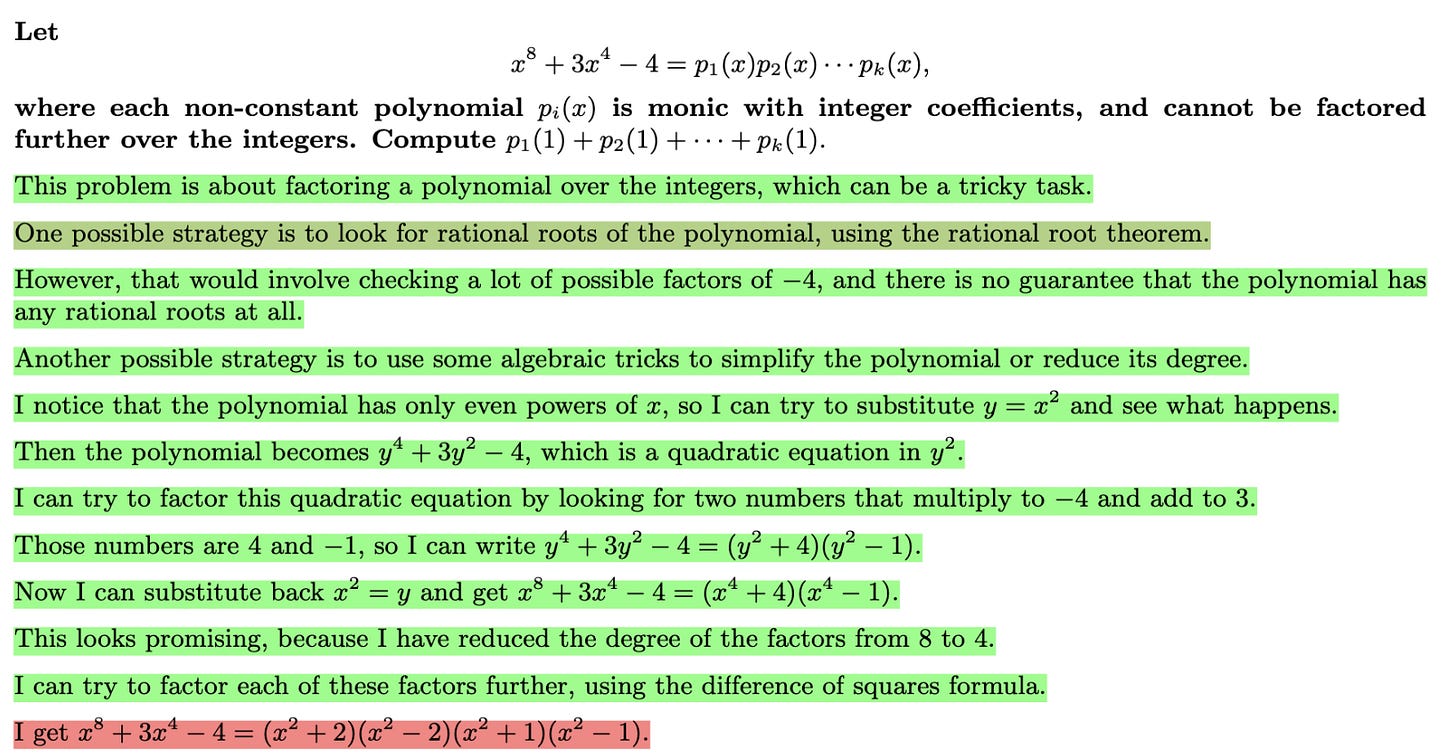

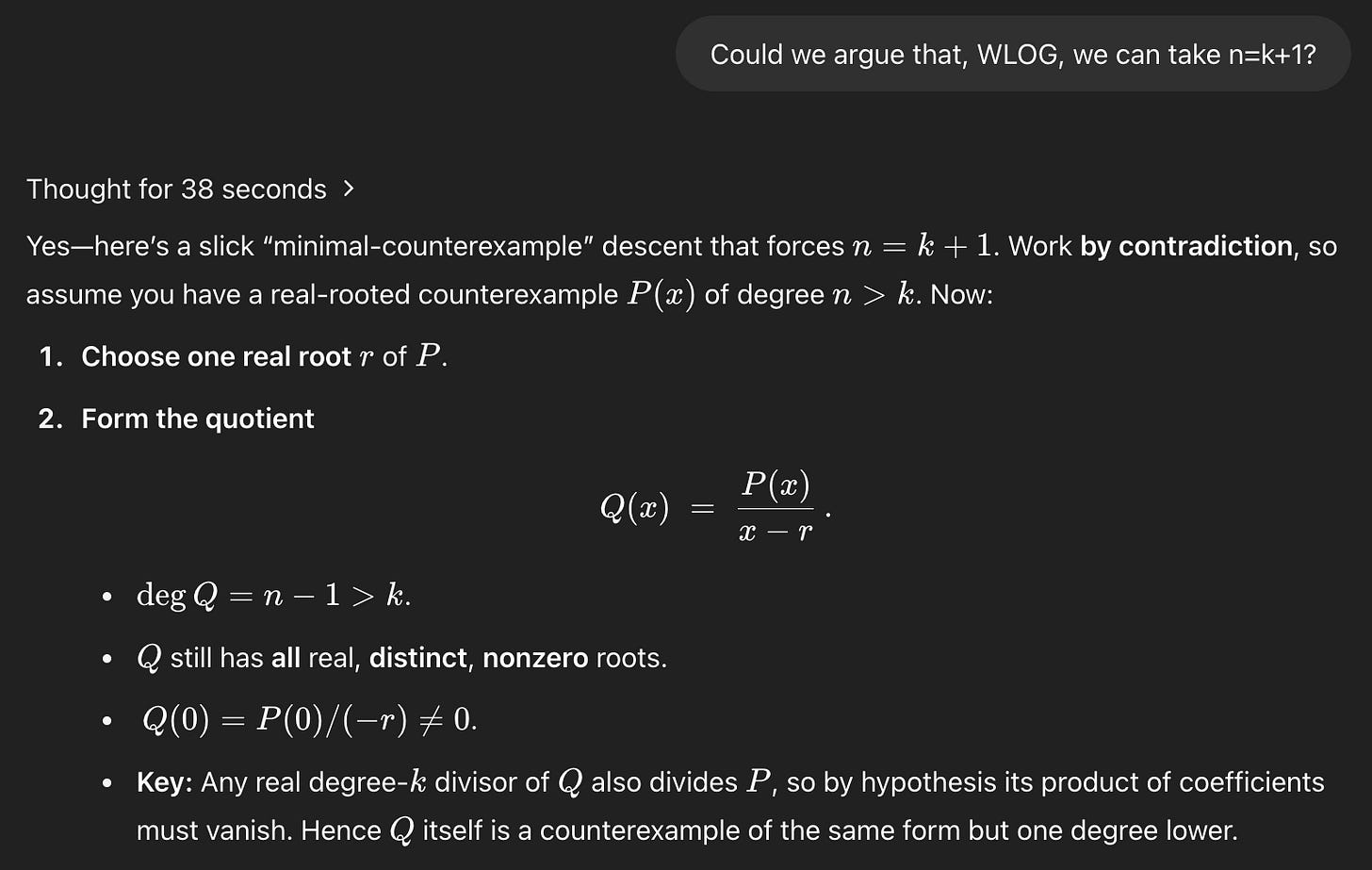

I’ve discussed the entire problem before, including the solution most commonly found by humans. Here I just want to focus on the first step of that solution: without loss of generality, we can take n=k+1.

Most solutions don’t even justify this step too deeply, saying things like:

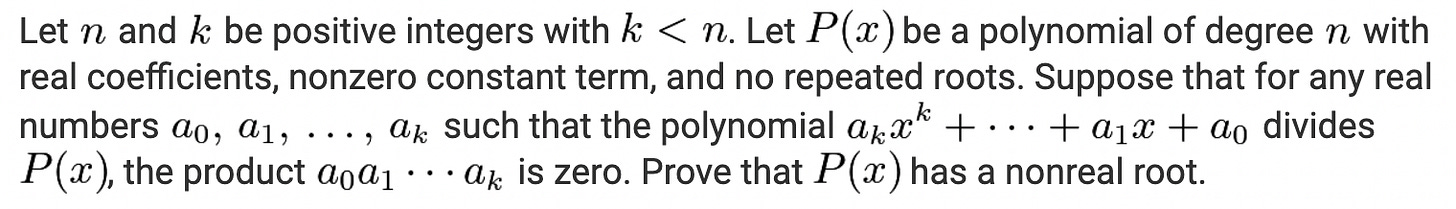

Assume for the sake of contradiction that P has n real roots. If n>k+1, we just need to consider a subset of these roots in the n=k+1 case to get our contradiction. Thus assume n=k+1.

For clarity, I’ll spell this out a bit more. Suppose we have proven the n=k+1 case: if all degree-(n-1) divisors of P have at least one coefficient that is 0, then P has a nonreal root. Now consider a smaller k. Assume for the sake of contradiction that P has all real roots. Form a degree-(k+1) polynomial with k+1 of these roots; call it Q. Note that Q satisfies the same conditions as P: real coefficients, nonzero constant term, and no repeated roots. Also, any degree-k divisor of Q is a degree-k divisor of P. So, by the problem’s supposition, such a divisor must have a zero coefficient. But, by the supposition that we’ve proven the theorem for the n=k+1 case, this all implies that Q has a nonreal root. Since Q’s roots are a subset of P’s roots, this implies that P has a nonreal root: contradiction. Thus, we only need to prove the n=k+1 case.

I hope that verbosity doesn’t obscure the simplicity of the idea: it’s not doing anything clever, it’s just noting that the conditions of the problem are all “preserved”, so to speak, by looking at divisors of P of any degree > k, and thus we can fix n to have any such value. It may not be obvious why this observation would be useful, but it’s nonetheless the sort of thing I think you’d find if you played around with the problem for a while. Also, it just feels very standard: it’s a kind of observation you’d see made all the time in an algebra or analysis class.

The harder parts of the problem come later and I won’t go into them, but to give you a sense of how this is a relatively small step, here’s the full solution that I quoted from above, with the quoted part highlighted. This step isn’t most of the work!

So, here’s the punchline: I just cannot get an AI system to make this step. None of the models on MathArena get it. I’ve sampled Gemini 2.5 Pro and o4-mini-high dozens more times: nothing.

Maybe they consider it, at least to some degree, but discard it because they can’t figure out how to use it? As I said, the rest of the problem is harder, and it’s not obvious that this step is useful on its own. So, I tried just asking Gemini 2.5 Pro and o3/o4-mini-high to make initial observations about the problem, using this prompt:

Below is a math problem. Your task is to make as many observations about the problem as possible without necessarily proposing a full solution. You may try to solve it, if that is helpful, but that is NOT your primary task. Instead, you are trying to accumulate as many *initial results* as possible: observations that may be useful in constructing a full solution, which we will do later. These observations might come in the form of lemmas or problem reductions. At this stage prioritize quantity and cast a wide net: it is fine if many or even most of these ideas turn out not to be ultimately useful.

Problem: […]

Now generate as many observations, lemmas, reductions, etc. about this problem as you can.

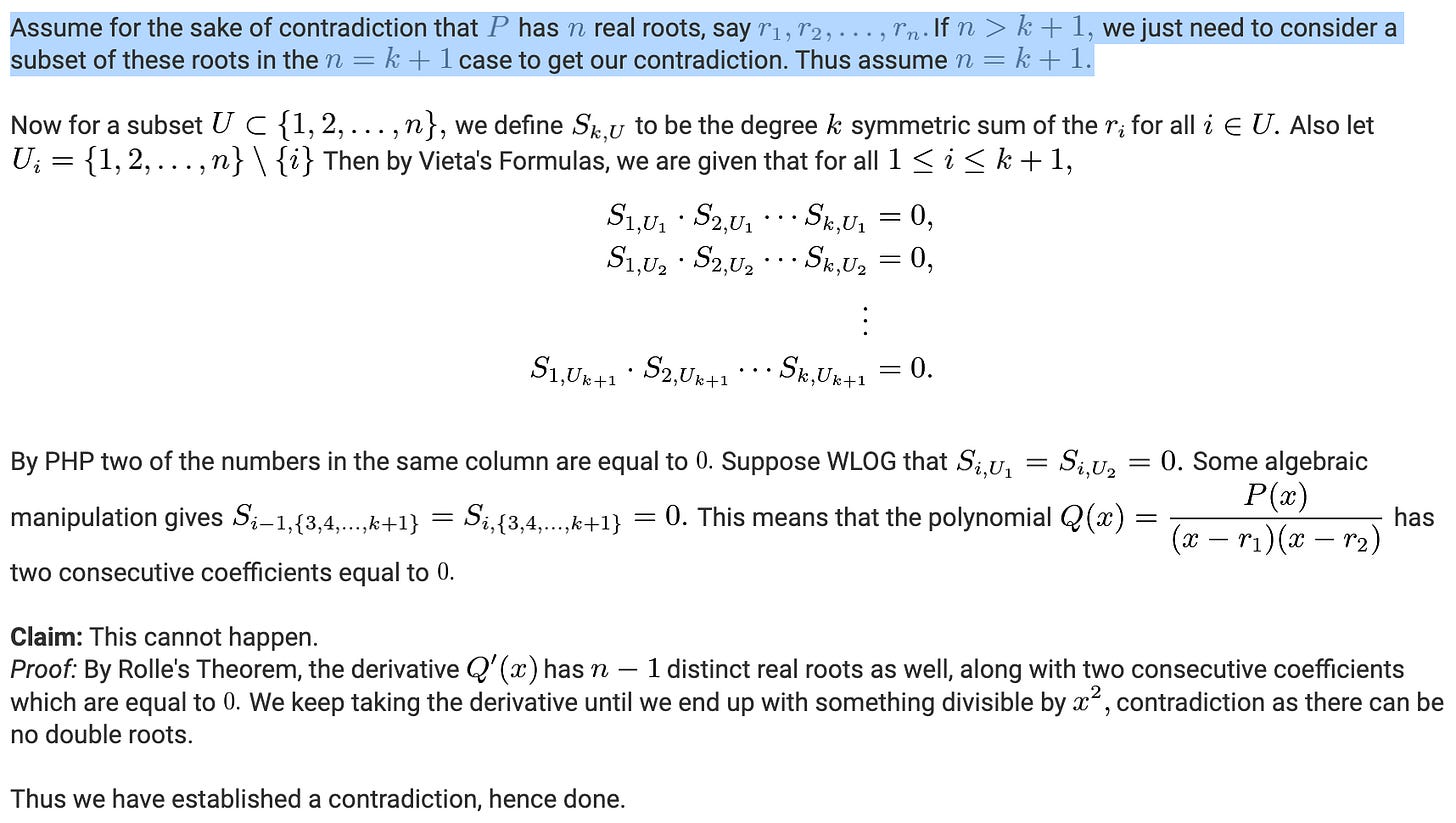

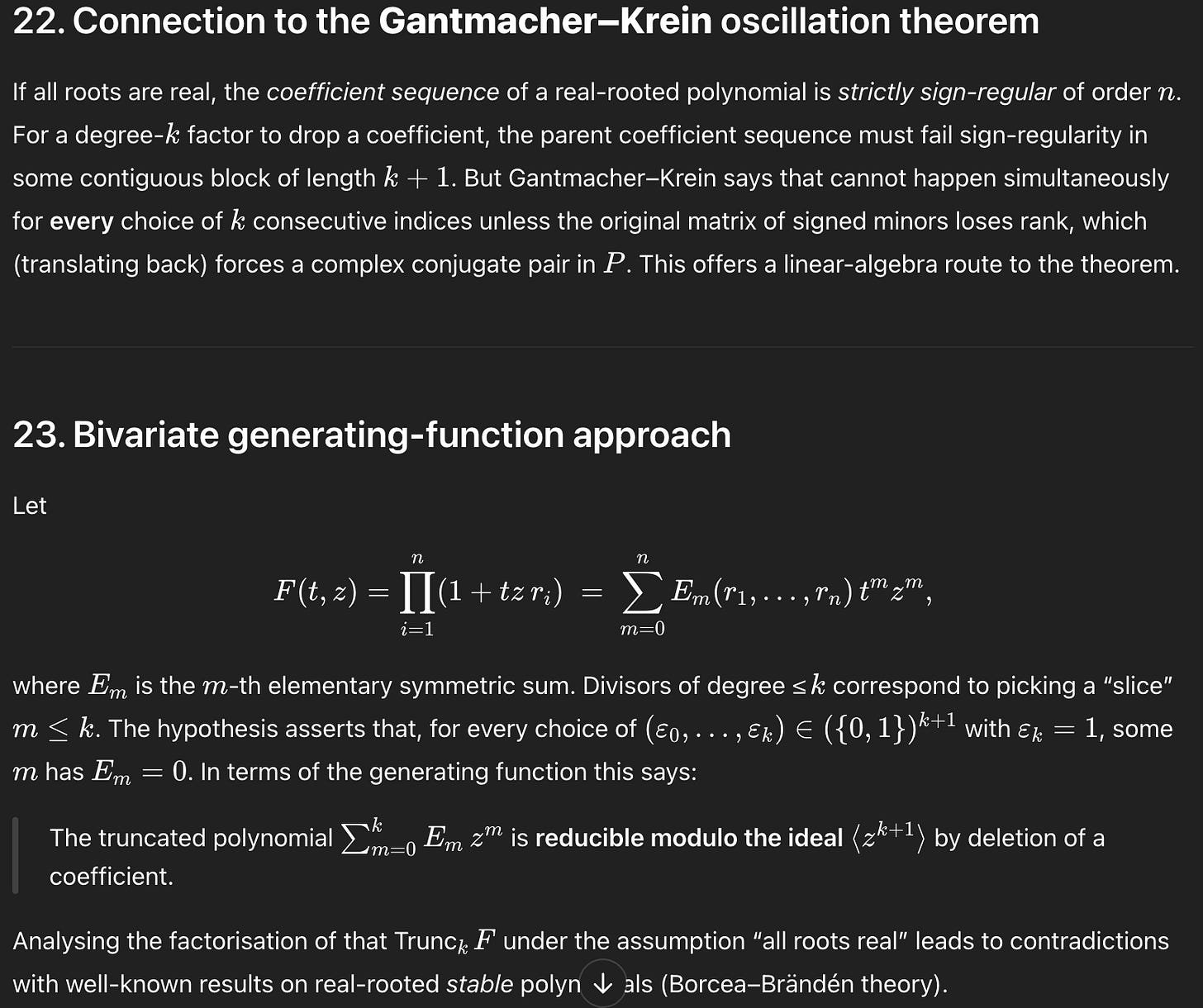

The models come up with lots of ideas, but never the one we’re looking for. In terms of concrete observations, they seem to run out of steam pretty quickly and then switch to approaches relying more on background knowledge. Here’s a fairly representative sample of the output:

At least on competition math problems, I’ve come to expect LLM-based systems to shine when they just need to apply familiar approaches, and I think this counts as that. But, apparently, something about this step is difficult for them.

Why is this so hard?

One possibility is that I’m misinterpreting things. For one, I could be underestimating the difficulty of this step. Maybe you really have to go much deeper into the problem before you would even think to try a reduction like this step. That would mean this step’s difficulty is closer to the difficulty of the entire problem. Or, maybe AI systems can generate this step, but it just takes a better prompt and/or more samples. Please: I would be grateful if anyone could demonstrate that!

There’s another possibility, though, and it’s sort of the obvious one: maybe these systems are bad at proofs just because they haven’t really been trained on proofs. We’ve discussed this before. Namely, we know that their notable reasoning abilities come from being trained with reinforcement learning (RL) on math problems that have simple answers which can be easily verified. While there’s apparently some spillover from this to proof-based problems—they’re getting the easier USAMO problems, after all—as far as we know, they haven’t been trained to generate correct proofs in any particularly direct way.

Thus, even if the desired reasoning step isn’t that difficult, it’s not the sort of thing you’d be able to do without some focused training. Sure, it’s the sort of thing you’d see many times in algebra and analysis classes, but if you didn’t “pay close attention”, so to speak, you wouldn’t be able to reproduce it. If RL is the only way we know to get LLM-based systems to “pay close attention” to a kind of reasoning, then maybe this is just what we’d expect.

Improving Proving

We might thus tentatively conclude that one segment of the frontier of AI systems’ math abilities is that they don’t deeply understand how to perform even relatively standard proof steps, especially if these steps don’t naturally arise when solving math problems with simple, short-form answers. This isn’t an exotic failure: it’s just that the systems are not adept at some of the basics.

How could we make them better?

On some level, the answer must be that we need to train them on proofs. But how? Such LLM-based systems have presumably already been pre-trained on many proofs, with the typical language model objective of predicting the next token. It shouldn’t surprise us that this is insufficient to yield strong proof-writing capabilities: it wasn’t enough to get especially strong reasoning capabilities in general. As mentioned above, base LLMs needed RL to get better at reasoning.

So, here’s my superficial understanding of the relevant part of RL. The key is the “reward signal”, which says to what degree an outcome is good. The signal takes a numerical value, where higher is better. Reward signals are easy to obtain in some domains: for chess, it can just be 1/0.5/0 for win/draw/lose; for math problems with short-form answers, it can just be 1/0 for right/wrong.

It gets more complicated when outcomes are harder to evaluate. One approach is to have a reward model. For instance, you can get humans to judge some fuzzy outcome, like whether a response to a prompt is “helpful”, and then train a model—via standard supervised learning—to mimic that human judgement. The model itself can then be used to provide the reward signal in RL. This goes by the name of Reinforcement Learning from Human Feedback (RLHF) and is famously a big part of what made LLMs so successful as general-purpose assistants.

Proofs occupy an interesting middle ground. They are binary in principle—either right or wrong—but they are very hard to evaluate. This is because proofs are only sketches: they attempt to convince a mathematical audience that all the precise details could, in principle, be filled in. Flaws can be subtle, and omitted details are not necessarily fatal.

How could we get a reward signal about proofs which we could use in RL? We could try something RLHF-esque: the human judgement would be whether a qualified human says a proof is correct or not, we could train a model to mimic that human judgement, and we could use that model as the reward model in RL.

Can’t Hack It

Would this work? It’s sort of the obvious thing to try, so I assume the big companies have tried it. So, whatever scale and sophistication they have tried only yields the limited competence that we observe: not great.

That matches my intuition, anyway. Given how subtle the difference can be between a correct proof and an incorrect one, I think it’s hard to imagine a reward model getting a particularly clear picture of the domain. In RL there’s a problem known as “reward hacking”, where the model being trained with RL exploits quirks in the reward model to rack up reward scores, but in a way that doesn’t reflect the skill it’s intended to learn. Models trained to judge proofs just seem ripe for reward hacking.

To be fair, even humans do this sort of thing. There’s a whole Wikipedia article on proof by intimidation, a tongue-in-cheek term for bluffing through a proof “by giving an argument loaded with jargon and obscure results or by marking it as obvious or trivial”. Perhaps a reward model would believe such a bluff, and then the RL’d model would just learn to bluff. Some of the LLM-based systems I’ve used seem to have learned to do just this.

You could, of course, try harder to build a proof-judging reward model. One bit of relevant work on this is a 2023 paper from OpenAI investigated training “process-supervised reward models” (PRMs) for relatively simple, computational math problems. These models still just predict whether a final answer is right or wrong, but they are trained to do so by predicting whether each reasoning step in the chain-of-thought is right or wrong—hence the term “process”. This is in contrast with outcome-supervised reward models (ORMs), which make the same prediction but are trained only on problem/solution pairs. The OpenAI paper showed that PRMs could do better than ORMs. Below is an example of a PRM spotting an error, in red.

This is interesting to us because what the PRM is doing is very similar to judging whether the steps in a proof are correct. Unfortunately, it seems like PRMs weren’t useful even for making the current generation of reasoning models. One of the more transparent discussions of how to train such models comes from DeepSeek’s paper on R1, a model that was comparable in math abilities to OpenAI’s o1 model. When discussing what reward function they use for RL, they say (page 6 here, lightly edited):

We do not apply outcome or process reward models, because we find that the reward model may suffer from reward hacking during reinforcement learning.

So, apparently, reward hacking is a deal-breaker for reward models that predict whether solutions to short-answer math problems are correct. It seems likely that the problem is at least as bad for models that predict whether proofs are correct.

Just Gotta Hack it

One ray of hope: as I’ve discussed, Gemini 2.5 Pro seems to have a better handle on judging proofs than previous models. Thus, maybe we’ve bootstrapped our way into a position where frontier models are able to serve as decent reward models for proof-writing, at least around the current frontier of system capabilities. Maybe this can create a virtuous cycle. It’s a bit reminiscent of curriculum learning, albeit at a very large scale; I hope to discuss this more in a future post. This is probably the “just scale up” approach that I feel most optimistic about, at least for building the sort of “competence with the basics” we’re discussing.

We could also try to guide such a model with some finer-grained annotations, in the spirit of the PRM work. For instance, given a high-level outline of a solution, a reward model could perhaps be trusted to judge the presence or absence of each specified reasoning step, which could be aggregated into a reward score. Perhaps it’s not so easy to bluff your way around the “n=k+1” reduction step: if you manage to produce even just those 5 characters, you’re pretty close to the right idea; if you don’t, you’re pretty obviously far from it.

Humans already make these sorts of solution outlines all the time: it’s the standard way to grade not just math competitions but also math homework. “1 point for the n=k+1 reduction; 2 points for showing two adjacent coefficients are zero” and so on. I don’t know if it would be cost-prohibitive to create enough of these solution outlines, but “just scale up” approaches are never that cheap.

A model trained on this data may still sound pretty reward-hackable. But, perhaps another ray of hope: I’ve noticed that, when given broad hints about what steps to take, the models are often able to work out the details. For instance, returning to the initial problem of this post, if I explicitly ask o4-mini-high whether the desired first step makes sense, it explains correctly, albeit verbosely, why it does.

The hope here would be that you can get one part of the reward model to incentivize coming up with roughly the right steps, and get it to play nice with the part of the reward model that is currently incentivizing putting together a correct proof given only an outline.

To be clear, like most research ideas, this probably wouldn’t pan out—but maybe worth a shot. Maybe anything plausible is worth a shot here: I’m impatient for these models to get a mastery of the basics. Then the real fun can start.

This being an elite competition, difficulty is relative: most people cannot solve any of these problems, at least, not without significant training. Still, some are easier than others.

Smart post and the research idea seems good to me. Only thought I had is that they're aiming for models that are generally pleasing to many audiences. Maybe reward training on proofs make their outputs in other domains less compelling?

Imagine the equivalent of this reasoning – "Assume for the sake of contradiction that P has n real roots. If n>k+1, we just need to consider a subset of these roots in the n=k+1 case to get our contradiction. Thus assume n=k+1." – being deployed when someone wants to draft an email or talk about an emotional problem. "Assume the user has already seen a psychologist with training in CBT" (Being facetious, but you get the higher level point).

I often think about how the labs talk about how the release models are fine-tuned for certain things and people should temper expectations. In particular, that OpenAI warned o3 the released model wasn't going to be as good at ARC-AGI as the preview model

I’ve been trying to get LLMs to write various sorts of proofs, and one thing that has helped is expanding the prompt with examples. This can get really big but for an agentic system like Claude Code you can say things like: “If you are thinking about a proof by induction, check file X to see examples.” Maybe what is happening is that it doesn’t really get this “reduction” idea, but with some examples it could.

In general, how many of these issues are we going to be able to afford to specially train a model to solve, and how many are we going to have to solve with cheaper techniques? (Measured by engineering time to develop, maybe not inference cost.) Prompt engineering is very cheap in developer time.